3D Interaction

- Whole-hand Interaction

- Interactive Secondary Views: Volumetric Lenses, Windows, and Mirrors for VR (2005-2011)

- 3rd Person Pointing in a Mirror World

- Touch Interfaces for VR and Visualization

- Menus in VR

- User Study of Stereoscopy and Lit Shading

Whole-hand Interaction

Research Assistants: Mores Prachyabrued, Arun Indugula

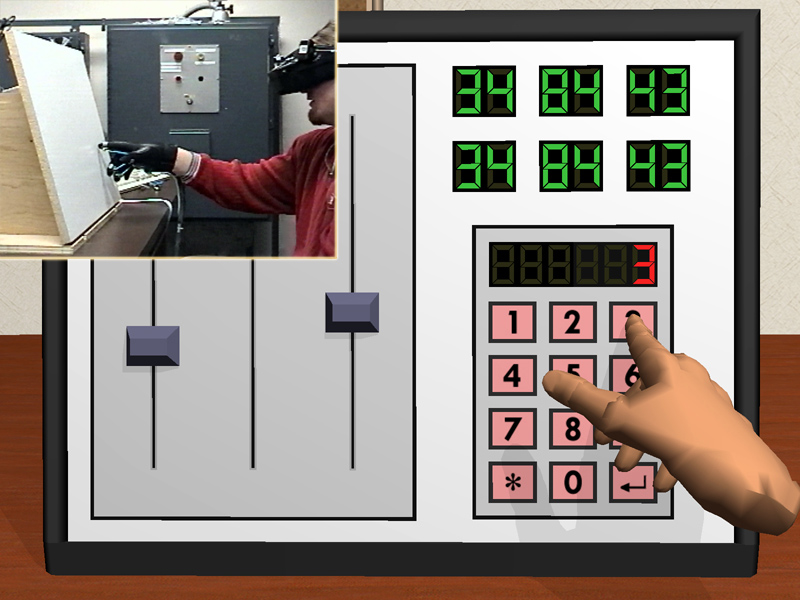

Whole-hand interaction is standard in real-world tasks, so it is also fundamental in many naturalistic VR applications, such as training for manual assembly tasks and ergonomic review of vehicle dashboards. We have studied virtual control panel interaction, virtual grasping based on physical simulation, methods to improve grasp release, visual cues, and associated haptic feedback techniques, including physical props and hand-grounded force feedback.

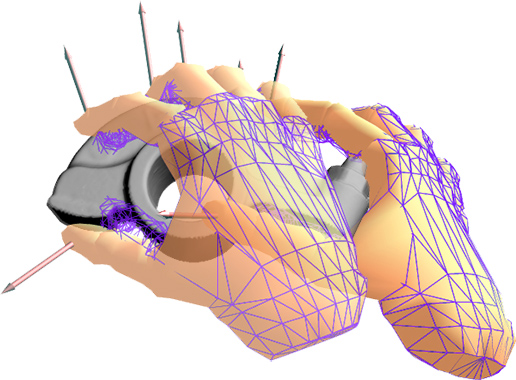

Physically-based grasping model

We developed a physically-based virtual grasping approach, based on spring-dampers connecting the user’s hand to a visual hand model controlled by physical simulation, that produces visually reasonable results and supports force rendering for force-feedback gloves. We later extended this with a grasp release method shown to improve grasp release speed, accuracy, and subjective experience. Shown below is our prototype system for natural whole-hand interactions in a desktop-sized workspace, as presented at IEEE VR 2005.

Grasps of a virtual rocker arm showing tracked hand (mesh), spring hand (solid), and force feedback vectors.

Also see our videos Realistic Virtual Grasping Control Panel and Releasing a Virtual Grasp.
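The spring-damper coupling described above can be illustrated with a minimal sketch. It assumes a single point per finger segment, plain 3-tuples for vectors, and arbitrary stiffness, damping, mass, and time-step values; the function names are hypothetical, and this is not the published implementation, which couples a full articulated hand model inside a physical simulation.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def coupling_force(tracked_pos: Vec3, sim_pos: Vec3,
                   tracked_vel: Vec3, sim_vel: Vec3,
                   k: float = 200.0, c: float = 5.0) -> Vec3:
    """Spring-damper force on a simulated hand point.

    The spring term (stiffness k) pulls the simulated point toward the tracked
    point; the damper term (coefficient c) resists their relative velocity.
    k and c are illustrative values, not parameters from the original system.
    """
    return tuple(k * (tp - sp) + c * (tv - sv)
                 for tp, sp, tv, sv in zip(tracked_pos, sim_pos, tracked_vel, sim_vel))


def feedback_force(coupling: Vec3) -> Vec3:
    """Reaction force for a force-feedback glove: equal and opposite to the coupling force."""
    return tuple(-f for f in coupling)


def integrate(sim_pos: Vec3, sim_vel: Vec3, force: Vec3,
              mass: float = 0.05, dt: float = 0.001) -> Tuple[Vec3, Vec3]:
    """One semi-implicit Euler step for a point mass (assumed stand-in for the physics engine)."""
    new_vel = tuple(v + f / mass * dt for v, f in zip(sim_vel, force))
    new_pos = tuple(p + v * dt for p, v in zip(sim_pos, new_vel))
    return new_pos, new_vel


# The real fingertip has sunk 1 cm below the simulated fingertip, which rests
# on an object's surface, so the spring is stretched.
tracked = (0.0, 0.0, 0.0)
simulated = (0.0, 0.01, 0.0)
f = coupling_force(tracked, simulated, (0.0, 0.0, 0.0), (0.0, 0.0, 0.0))
print(f)                  # force pulling the simulated fingertip toward the tracked one
print(feedback_force(f))  # force that would be displayed to the user's finger
```

Because the visible hand is driven by the simulation rather than directly by tracking, it can stay outside objects while the stretched spring supplies a force to render; in a sketch like this, stiffness trades visual lag against how far the real hand must penetrate before a firm force is felt.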

Improvements Based on User Behavior and Subjective Experience

Following our initial grasping method, we conducted experiments to understand grasping difficulties and the effects of various techniques on user experience and hand behavior. By better understanding user expectations and deviations from them, we could better align grasping methods with users. This led to two main directions for improving grasp release: the release mechanism (mentioned above) and optimized visual cues (below). We also conducted additional studies to optimize the spring model.

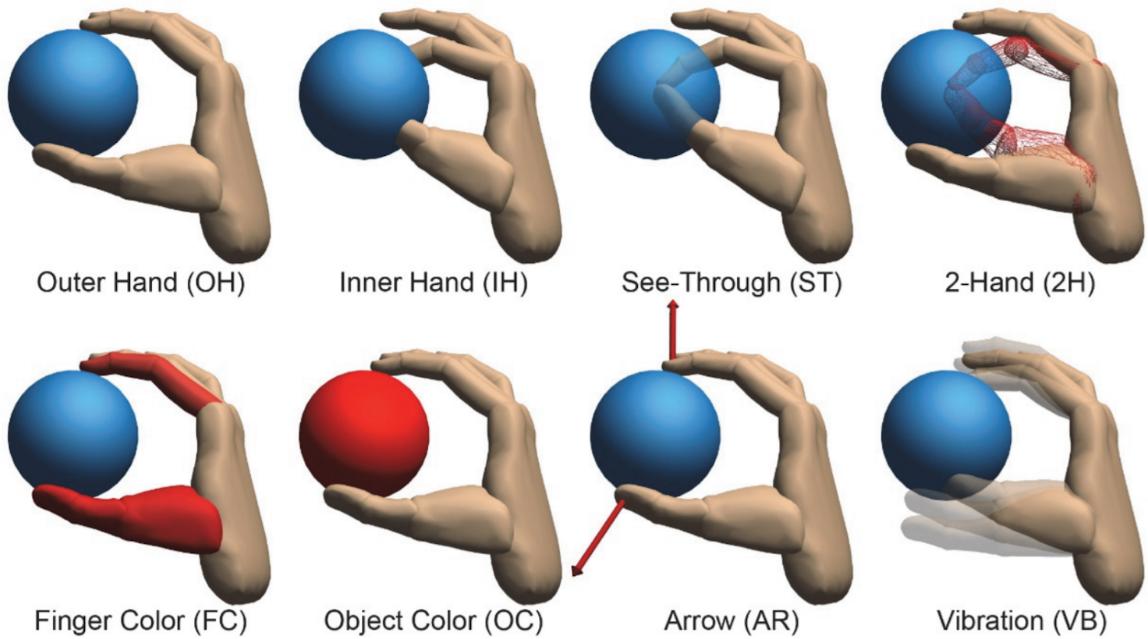

Visual Cues

When users grasp a VR object, especially without physical props or force feedback devices, the real fingers sink into the virtual object, and releasing the object can be difficult. The virtual hand can (optionally) be visually constrained to the bounds of the grasped object while the real hand is not. Prior 3D interaction guidelines stated that visual interpenetration should be avoided for good experience and performance, but the experiments behind them did not study articulated hands. In contrast, our own studies of visual interpenetration found that the standard constrained technique performs poorly and that simply drawing the interpenetrating hand improves performance. A deeper investigation of various visuals led to the following updated guideline: interaction techniques should provide interpenetration cues to help users understand and control interaction. More specifically, for grasping, users subjectively like certain visual cues that augment constrained visuals, while direct rendering of interpenetration can perform better.

Visual cues tested in an experiment. The IH and OH standards were among the best and worst methods in terms of performance, respectively. FC and OC may increase subjective ratings, but 2H provided better performance and the best overall tradeoff.
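As a hedged illustration of what an interpenetration cue needs as input, the sketch below computes how far a tracked fingertip has sunk into a spherical object and maps that depth to a cue opacity, which a renderer could use, for example, to fade in the unconstrained hand. The sphere proxy, the linear opacity ramp, and the `max_depth` parameter are assumptions for illustration; they are not the specific cues (IH, OH, FC, OC, 2H) evaluated in the study.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def penetration_depth(fingertip: Vec3, center: Vec3, radius: float) -> float:
    """Depth of a tracked fingertip inside a sphere; 0.0 when it is outside."""
    return max(0.0, radius - math.dist(fingertip, center))


def cue_opacity(depth: float, max_depth: float = 0.04) -> float:
    """Linearly map depth to [0, 1], reaching full opacity at max_depth (assumed, in meters)."""
    return min(1.0, depth / max_depth)


ball_center, ball_radius = (0.0, 0.0, 0.0), 0.05
d = penetration_depth((0.0, 0.0, 0.03), ball_center, ball_radius)
print(round(d, 3), round(cue_opacity(d), 2))
```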

Publications

- Mores Prachyabrued and Christoph W. Borst, “Design and Evaluation of Visual Interpenetration Cues in Virtual Grasping”, IEEE Transactions on Visualization and Computer Graphics, June 2016 (vol. 22 no. 6), pp. 1718-1731. (PDF)

- Mores Prachyabrued and Christoph W. Borst, “Visual Feedback for Virtual Grasping”, Proceedings of IEEE 3D User Interfaces (3DUI) 2014, pp. 19-26. (PDF) (Video)

- Mores Prachyabrued and Christoph W. Borst, “Effects and Optimization of Visual-Proprioceptive Discrepancy Reduction for Virtual Grasping”, Proceedings of IEEE 3D User Interfaces (3DUI) 2013, pp. 11-14. (PDF) (Video – Earlier Study) (Video – Newer Study)

- Christoph W. Borst and Mores Prachyabrued, “Nonuniform and Adaptive Coupling Stiffness for Virtual Grasping”, Proceedings of IEEE Virtual Reality 2013, pp. 35-38. (PDF) (Video)

- Mores Prachyabrued and Christoph W. Borst, “Virtual Grasp Release Method and Evaluation”, International Journal of Human-Computer Studies, November 2012 (vol. 70 no. 11), pp. 828-848. (PDF) (Video)

- Mores Prachyabrued and Christoph W. Borst, “Visual Interpenetration Tradeoffs in Whole-Hand Virtual Grasping”, Proceedings of IEEE 3D User Interfaces (3DUI) 2012, pp. 39-42. (PDF) (Video)

- Mores Prachyabrued and Christoph W. Borst, “Dropping the Ball: Releasing a Virtual Grasp”, Proceedings of IEEE 3D User Interfaces (3DUI) 2011, pp. 59-66. (“runner-up best paper”). (PDF)

- Christoph W. Borst and Richard A. Volz, “Evaluation of a Haptic Mixed Reality System for Interactions with a Virtual Control Panel”, Presence: Teleoperators and Virtual Environments, vol. 14 no. 6 (December 2005), pp. 677-696. (PDF)

- Christoph W. Borst and Arun P. Indugula, “A Spring Model for Whole-hand Virtual Grasping”, Presence: Teleoperators and Virtual Environments, vol. 15 no. 1 (February 2006), pp. 47-61. (PDF)

- Christoph W. Borst and Arun P. Indugula, “Realistic Virtual Grasping,” IEEE Virtual Reality 2005 conference, pp. 91-98, 320 (awarded VR2005 honorable mention). (link)

- Poster: Christoph W. Borst and Arun P. Indugula, “Whole-hand Grasping with Force Feedback,” IEEE WorldHaptics 2005.

- Christoph W. Borst and Richard A. Volz, “Preliminary Report on a Haptic Feedback Technique for Basic Interactions with a Virtual Control Panel,” EuroHaptics 2003 conference, pp. 1-13.

- Christoph W. Borst and Richard A. Volz, “Observations on and Modifications to the Rutgers Master to Support a Mixture of Passive Haptics and Active Force Feedback,” IEEE HAPTICS 2003, pp. 430-437. (link)

- IEEE VR Poster: Mores Prachyabrued and Christoph W. Borst, “Design and Evaluation of Visual Feedback for Virtual Grasp”, IEEE VR 2014, pp. 109-110.

Interactive Secondary Views: Volumetric Lenses, Windows, and Mirrors for VR (2005-2011)

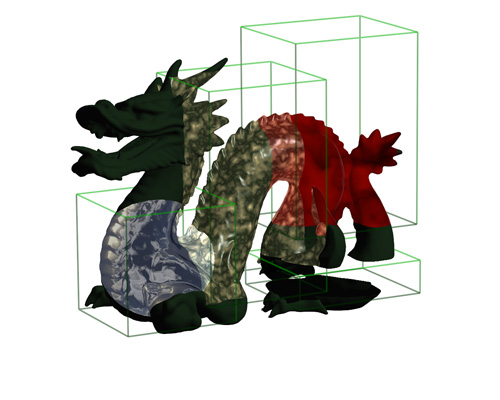

3D secondary views are analogous to 2D windows from desktop interfaces, including standard desktop windows, effects like magnifying lenses, or multiple viewports in a 3D modeling program. These provide ways for users to manage multiple views or visualization parameters without switching context (changing the entire view). We developed secondary view techniques for VR in the form of volumetric lenses, 3D windows, and 3D mirrors. A set of parameters controlled by the user provides a unifying framework for 3D volumes that behave like 3D volumetric lenses, clip windows, world-in-miniature views, and in other ways.

Volumetric Lenses

Research Assistants: Jan-Phillip Tiesel, Chris Best, Phanidhar Raghupathy

Volume lenses are 3D regions, such as boxes, that filter the content within them. Filtering can apply a rendering style that differs from the rest of the 3D scene or show content from an entirely different scene. We developed new lens types and new shader-based rendering methods to support multiple, potentially intersecting lenses in a single scene. We also developed interactive lens systems to support exploration of various scientific datasets (e.g., the Isaac Verot Coulee Watershed). Users can move lenses to easily view, for example, a comparative dataset, or to enhance features in selected regions.
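The idea of multiple, potentially intersecting lenses can be illustrated with a CPU-side sketch of the per-fragment decision: each lens is assumed to be an axis-aligned box carrying a color effect, and a point inside several lenses receives their effects in list order. The box shape, the `BoxLens` class, and the example effects are hypothetical; the published methods run in shaders and are not reproduced here.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float]


@dataclass
class BoxLens:
    lo: Vec3                          # minimum corner of the lens volume
    hi: Vec3                          # maximum corner of the lens volume
    effect: Callable[[Color], Color]  # filter applied to content inside the lens

    def contains(self, p: Vec3) -> bool:
        return all(l <= x <= h for l, x, h in zip(self.lo, p, self.hi))


def shade(point: Vec3, base: Color, lenses: List[BoxLens]) -> Color:
    """Apply, in order, the effect of every lens that contains the point."""
    color = base
    for lens in lenses:
        if lens.contains(point):
            color = lens.effect(color)
    return color


def invert(c: Color) -> Color:
    return tuple(1.0 - x for x in c)


def grayscale(c: Color) -> Color:
    g = sum(c) / 3.0
    return (g, g, g)


lenses = [BoxLens((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), invert),
          BoxLens((0.5, 0.0, 0.0), (2.0, 1.0, 1.0), grayscale)]
base = (1.0, 0.5, 0.0)
print(shade((0.75, 0.5, 0.5), base, lenses))  # inside both lenses: inverted, then gray
```

Composition here is order-dependent (inverting then graying differs in general from graying then inverting), so a lens system of this kind has to define an ordering for overlapping regions.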

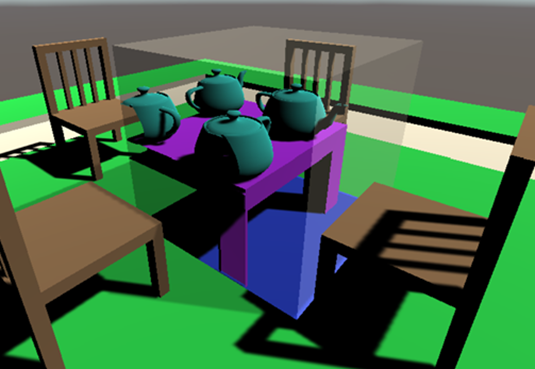

A lens (left) revealing an otherwise hidden world in place of the original world (right). Images from a VR Gems chapter by Woodworth and Borst.

Shader effect viewer with composable lenses.

3D Windows and Mirrors

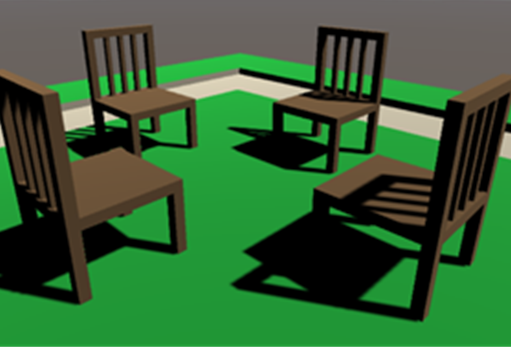

3D windows capture and replicate a portion of the environment or show different datasets or objects. Users can arrange windows in a scene to organize different datasets or get multiple convenient views of a scene (e.g., simultaneous zoomed-out and detail views). Users can reach into these windows and interact with objects inside just as they would in the original environment, with changes appearing in all views if desired.
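Reaching into a 3D window requires mapping the tracked hand from the window's volume to the region of the scene it shows. A minimal sketch, assuming the window contents are a uniformly scaled and translated copy of a source region (a scale below 1 gives a world-in-miniature) with rotation omitted; `WindowView` and its fields are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class WindowView:
    window_center: Vec3  # where the window volume sits in the user's space
    source_center: Vec3  # center of the scene region the window replicates
    scale: float         # window size / source size (e.g., 0.5 = half-size miniature)

    def to_source(self, p: Vec3) -> Vec3:
        """Map a hand position inside the window to source-scene coordinates."""
        return tuple(s + (x - w) / self.scale
                     for x, w, s in zip(p, self.window_center, self.source_center))

    def to_window(self, q: Vec3) -> Vec3:
        """Map a source-scene position into the window (used to draw the replica)."""
        return tuple(w + (x - s) * self.scale
                     for x, w, s in zip(q, self.window_center, self.source_center))


view = WindowView(window_center=(1.0, 1.2, -0.5), source_center=(10.0, 0.0, 0.0), scale=0.5)
hand = (1.1, 1.2, -0.5)
print(view.to_source(hand))
```

If edits made through a window are applied in source coordinates, they naturally appear in the main view and in every other window replicating the same region.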

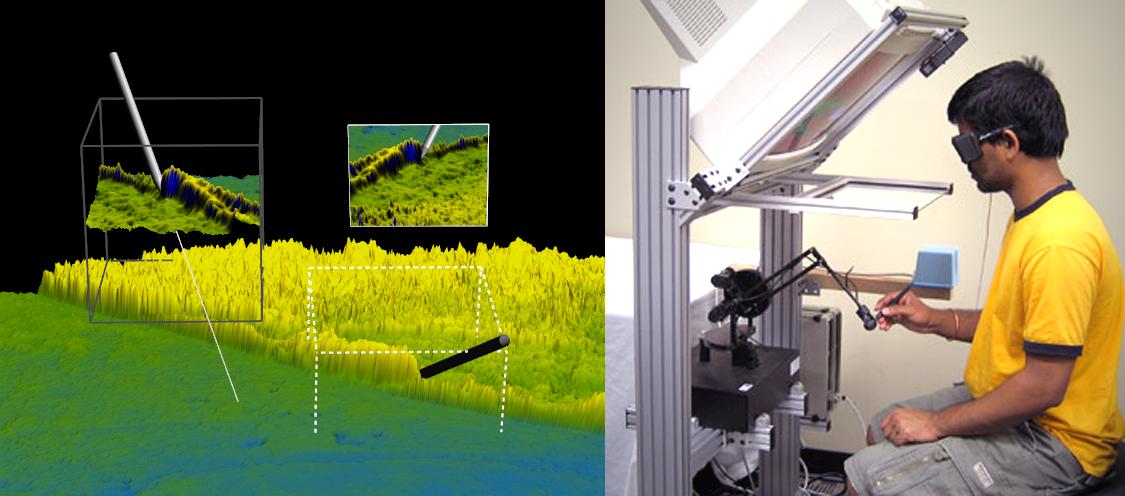

A 3D mirror is a secondary view that contains an interactive, mirrored version of a dataset. This may make it easier to understand or interact with objects occluded in the main view (e.g., to see the back faces of objects). We used the technique to allow users to trace paths through mountainous terrain while seeing front and back views simultaneously, or to reach into the mirror to draw directly on back-facing features of surfaces when desired.
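Geometrically, a planar mirror reflects content across a plane through a point q with unit normal n, mapping p to p' = p - 2((p - q)·n)n. The sketch below applies this to points; it assumes a planar mirror and a unit-length normal, and the function name is hypothetical. Because a reflection reverses handedness, a renderer drawing the mirrored geometry would typically also flip triangle winding (or the face-culling mode).

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def reflect(p: Vec3, q: Vec3, n: Vec3) -> Vec3:
    """Reflect point p across the plane through q with unit normal n."""
    d = sum((pi - qi) * ni for pi, qi, ni in zip(p, q, n))  # signed distance to the plane
    return tuple(pi - 2.0 * d * ni for pi, ni in zip(p, n))


# Mirror plane x = 0: a point on one side appears at the same distance on the other.
print(reflect((2.0, 3.0, 4.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

Since reflecting twice returns the original point, the same function could map a hand reaching into the mirror back onto the original surface, which is what drawing on back-facing features through the mirror requires.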

We compared different secondary view techniques for annotating terrain features (drawing along hills and valleys that included portions occluded in the main view). Results show the importance of interactive views (supporting reaching in rather than just providing visuals) and favor the use of 3D (volumetric) windows or mirrors over more conventional 2D windows or mirror configurations with rectangular frames. We also considered force feedback during the annotation, as discussed in Force Feedback for Drawing on Data Surfaces.

A study participant traces hills and valleys in mountainous terrain, using 3D mirrors and windows. The secondary views provide front and back views simultaneously without users needing to pause to adjust view.

Also see the Secondary Views videos: Single-pass 3D Lens Rendering and Spatiotemporal Time Warp Example (IEEE VR 2010 and extended in 2011 IEEE TVCG), Secondary Views Experiment (ISVC 2011), Composable Volumetric Lenses (IEEE VR 2008 and IEEE TVCG 2010), and 3D Windows in Geosciences Exploration System (CGVR 2007).

Selected Publications

- IEEE TVCG 2011 paper describing composable 3D lens rendering with spatiotemporal effects (video) (doi)

- ISVC (Visual Computing) 2011 paper investigating secondary views in a multimodal VR environment (PDF) (doi)

- IEEE VR 2010 paper showing our single-pass 3D lens rendering with a spatiotemporal time warp example (doi)

- IEEE TVCG 2010 paper on the real-time rendering of composable 3D Lenses for interactive VR (doi)

- ACM SIGGRAPH 2009 describing single-pass rendering of composable volumetric lens effects (doi)

- GCAGS 2009 paper discussing the use of lenses on cross-cutting geological datasets composed of LIDAR imagery (link)

- IEEE VR 2008 paper discussing a new rendering approach for composable volumetric lenses (doi)

- CGVR 2007 paper detailing volumetric window applications, rendering techniques, and performance evaluations. (PDF) (dblp)

3rd Person Pointing in a Mirror World

Research Assistant: Jason Woodworth

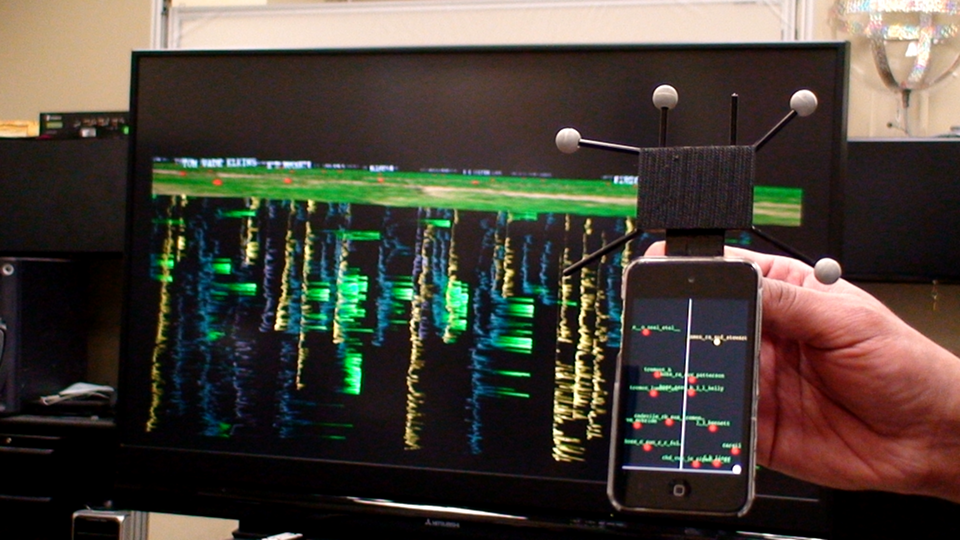

Accurate pointing is integral to communication in collaborative virtual environments. During the development of Kvasir-VR, in which a teacher sees a third-person view of themselves on a large TV and must point at features in the environment, we noted large errors in teacher pointing, leading to miscommunication and confusion among students. We developed several visual feedback techniques to increase accuracy while allowing the teacher to remain unencumbered. Multiple studies were performed to determine the best technique, test whether stereoscopy aided pointing, and gain information about pointing in a mirrored third-person perspective. Results show that the techniques increase both pointing accuracy and precision compared to non-enhanced pointing, and that stereoscopy is not a sufficient alternative for achieving such improvement. Results also give general insight into how users point given a third-person view of themselves, such as how long users spend in ballistic versus corrective motions.

A user demonstrating a virtual mirror pointing technique in a testing environment (left) and as a teacher in the Virtual Energy Center (right). Kinect tracking is used to determine a pointing direction and a visual aid is displayed to help the user direct their pointing to a desired target.
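A pointing direction derived from skeleton tracking can be turned into a target location by intersecting a ray with the scene. The sketch below assumes the ray runs from a tracked shoulder joint through the hand and that targets lie on a plane; the joint choice, the planar target, and the function names are assumptions, not the method used in Kvasir-VR. A visual aid could then be drawn at the returned point.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x - y for x, y in zip(a, b))


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def pointing_hit(shoulder: Vec3, hand: Vec3,
                 plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Intersect the shoulder-to-hand ray with a plane; None if parallel or behind the user."""
    direction = sub(hand, shoulder)
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the plane
    t = dot(sub(plane_point, shoulder), plane_normal) / denom
    if t < 0:
        return None  # plane lies behind the pointing direction
    return tuple(s + t * d for s, d in zip(shoulder, direction))


# Target wall at z = 3 m; joint positions in meters are made up for illustration.
print(pointing_hit((0.2, 1.4, 0.0), (0.3, 1.5, 0.5), (0.0, 0.0, 3.0), (0.0, 0.0, 1.0)))
```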

Touch Interfaces for VR and Visualization

Research Assistants: Mores Prachyabrued, Nicholas Lipari, Prabhakar V. Vemavarapu, and David Ducrest

Before consumer VR controllers had touch sensing, we integrated touch interactions into a cohesive smartphone-based VR controller (versions with and without 6-DOF tracking). Touch surfaces offer new interaction styles and also aid VR interaction when tracking is absent or imprecise, or when users have limited arm mobility or fatigue. Newer VR controllers increasingly include touch sensing, which may be used to navigate menus or perform other interactions.

Handymap used the touch surface for object selection to address occlusions that were problematic for standard ray-pointing approaches. Because it reduces aiming precision requirements, it is useful for low-cost VR setups with modest tracking precision and small displays. A user study found the Handymap technique promising compared to standard ray pointing when selecting occluded, distant, or small targets in a geosciences application.

Additionally, in Handymenu, the touch surface is split into two areas: one for menu interaction and the other for spatial interactions such as VR object selection, manipulation, navigation, or parameter adjustment. See our section on Smartphone-based VR Menus (Handymenu) for more information.

Another touch interface method considers a two-sided handheld touch device and “back-of-the-device” interaction. By dividing both surfaces into halves, the four touch areas enable 3D object selection, manipulation, and feature extraction using combinations of simultaneous touches. The top surface provides primary or fine interactions, and the bottom surface allows secondary or coarse interactions.

Two Android phones attached back to back; the top surface shows the interface. A user holds the interaction device with both hands to interact with a dense virtual environment.
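The four-area layout can be sketched as a classifier that turns simultaneous touches into a set of active areas, which is then looked up in a gesture table. The left/right split, the coordinate convention, and the entries in the `ACTIONS` table are hypothetical placeholders chosen only to show the mechanism; the actual assignments in the system are not described here.

```python
from typing import Dict, FrozenSet, Iterable, Optional, Tuple

# A touch is (surface, x): surface is "front" or "back"; x in [0, 1] runs left to right
# as seen by the user (assumed already un-mirrored for the back surface).
Touch = Tuple[str, float]


def touch_area(surface: str, x: float) -> str:
    """Classify one touch into one of four areas: front/back by left/right half."""
    if surface not in ("front", "back"):
        raise ValueError(f"unknown surface: {surface}")
    return f"{surface}-{'left' if x < 0.5 else 'right'}"


def active_areas(touches: Iterable[Touch]) -> FrozenSet[str]:
    return frozenset(touch_area(s, x) for s, x in touches)


# Hypothetical mapping: front = primary/fine, back = secondary/coarse.
ACTIONS: Dict[FrozenSet[str], str] = {
    frozenset({"front-right"}): "fine selection",
    frozenset({"back-right"}): "coarse selection",
    frozenset({"front-left", "front-right"}): "fine manipulation",
    frozenset({"back-left", "back-right"}): "coarse manipulation",
    frozenset({"front-left", "back-left"}): "feature extraction",
}


def interpret(touches: Iterable[Touch]) -> Optional[str]:
    """Return the action for the current touch combination, or None if unmapped."""
    return ACTIONS.get(active_areas(touches))


print(interpret([("front", 0.2), ("front", 0.8)]))
```

Keying the table by a set of areas makes the lookup independent of the order in which fingers land.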

Also see our Handymap Selection Interface Video.

Publications

- Prabhakar V. Vemavarapu and Christoph W. Borst, “Evaluation of a Handheld Touch Device as an Alternative to Standard Ray-based Selection in a Geosciences Visualization Environment”, Workshop on Off-the-Shelf Virtual Reality (OTSVR) at IEEE VR 2013. (PDF)

- Mores Prachyabrued, David Ducrest, and Christoph W. Borst, “Handymap: A Selection Interface for Cluttered VR Environments Using a Tracked Hand-held Touch Device”, ISVC (Visual Computing) 2011, pp. 45-54. (PDF)

Menus in VR

Pointer-based Menus (2008-2010)

Research Assistant: Kaushik Das

Keeping in mind different types of VR hardware, software, and applications, we developed a customizable menu interface and conducted experiments to optimize menu characteristics (size, layout type, placement, auto-scale). Based on this work, we developed a menu library for integrating hierarchical menus into Virtual Reality systems. Versions have been implemented for three graphics back ends: OpenGL, OpenSG, and OpenSceneGraph.
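One common way to implement menu auto-scaling, assumed here for illustration, is to scale a menu with its distance from the viewer so that it keeps a constant angular size: width = 2 · distance · tan(angle / 2). The function name and the 20-degree default are hypothetical, not values from the experiments.

```python
import math


def autoscaled_width(distance: float, angular_size_deg: float = 20.0) -> float:
    """World-space menu width that subtends angular_size_deg at the given viewing distance."""
    return 2.0 * distance * math.tan(math.radians(angular_size_deg) / 2.0)


for d in (1.0, 2.0, 4.0):
    print(d, round(autoscaled_width(d), 3))
```

Width grows linearly with distance, so a menu placed twice as far away is drawn twice as large and appears the same size to the user.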

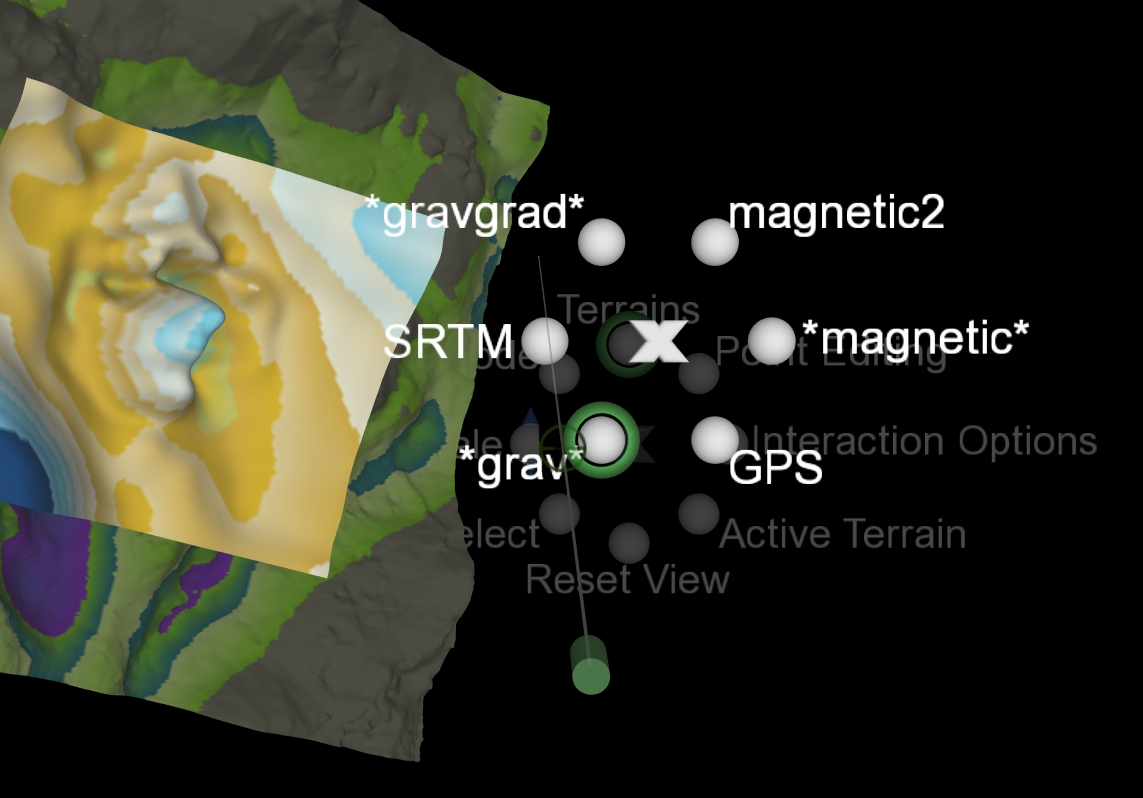

Second-level menu for dataset selection in a geosciences visualization system. Users activate and arrange multiple datasets from the same geospatial location.

Also see our VR Menu Demonstration Video.

Handymenu: Smartphone-based VR Menus (2013-2015)

Research Assistants: Mores Prachyabrued, Nicholas Lipari, and David Ducrest

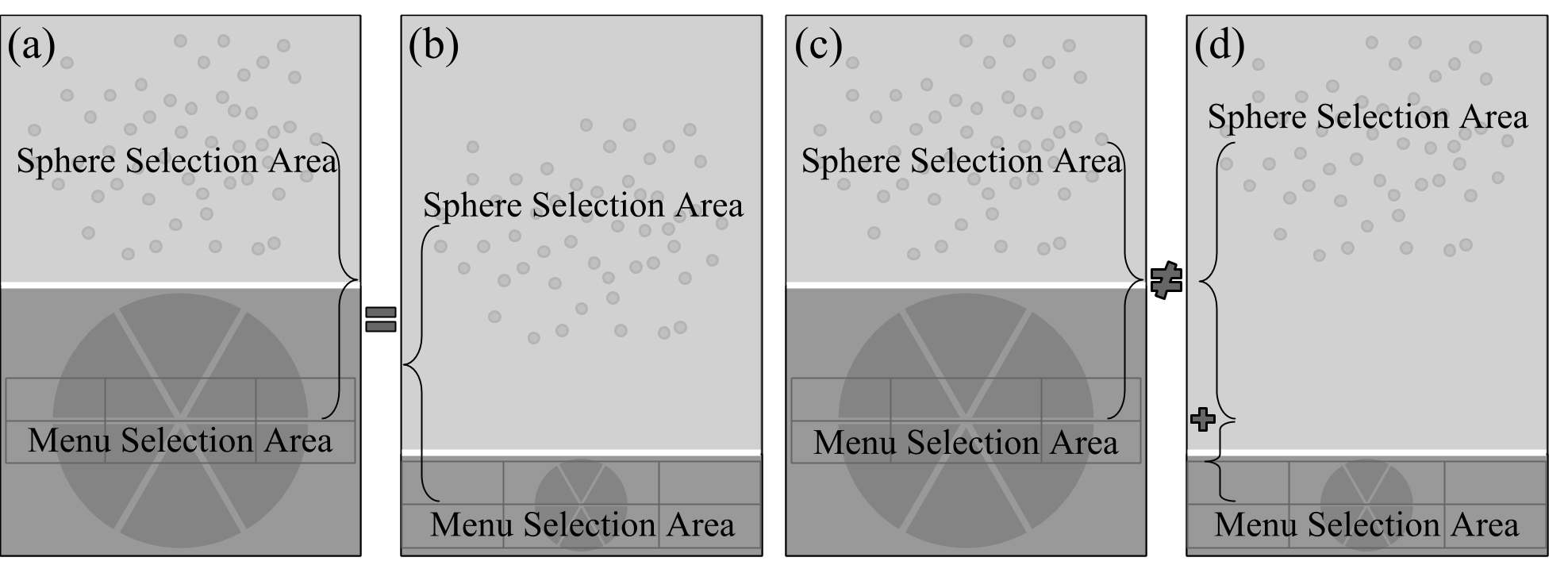

We integrated touch menus into a smartphone-based VR controller. Smartphone touch surfaces offer new interaction styles and aid VR interaction. Our technique does not require specialized tracking (which can often be absent or imprecise) and mitigates the effects of limited arm mobility or fatigue. The touch surface is split into two areas: one for menu interaction and the other for object selection, manipulation, or navigation. Users switch between the two areas and perform nested, repeated selections. A formal experiment compared tradeoffs and user performance related to VR object selection techniques (ray and touch), menu area sizes, menu selection techniques (ray and touch), and menu layouts (pie and grid).
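The split touch surface and the two menu layouts can be sketched as simple coordinate mappings. This sketch assumes normalized touch coordinates, a menu area along the bottom of the screen whose height fraction is adjustable (echoing the menu-size conditions), pie items numbered clockwise from the top, and row-major grid items; all names and the layout are hypothetical rather than Handymenu's actual code.

```python
import math


def route_touch(y: float, menu_fraction: float = 0.4) -> str:
    """Route a touch by its normalized y (0 = top, 1 = bottom); the bottom band is the menu area."""
    return "menu" if y >= 1.0 - menu_fraction else "spatial"


def pie_item(dx: float, dy: float, n_items: int) -> int:
    """Pie item under a touch at offset (dx, dy) from the menu center (y pointing up).

    Item 0 is centered at 12 o'clock and indices increase clockwise.
    """
    angle = math.atan2(dx, dy)  # 0 when pointing up, positive clockwise
    sector = 2.0 * math.pi / n_items
    return int(((angle + sector / 2.0) % (2.0 * math.pi)) // sector)


def grid_item(u: float, v: float, rows: int, cols: int) -> int:
    """Row-major grid item under normalized menu-area coordinates u, v in [0, 1]."""
    col = min(int(u * cols), cols - 1)
    row = min(int(v * rows), rows - 1)
    return row * cols + col


print(route_touch(0.9), pie_item(1.0, 0.0, 4), grid_item(0.5, 0.5, 2, 3))
```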

Smartphone-based interactions with onscreen rectangular menu.

Close-up view of touch interface with pie menu.

Comparison of small and large experiment conditions with relative menu sizes.

Publications

- Nicholas G. Lipari and Christoph W. Borst, “Handymenu: Integrating Menu Selection into a Multifunction Smartphone-based VR Controller”, Proceedings of IEEE 3D User Interfaces (3DUI) 2015, pp. 129-132. (PDF) (Video)

- Kaushik Das and Christoph W. Borst, “An Evaluation of Menu Properties and Pointing Techniques in a Projection-Based VR Environment”, IEEE 3D User Interfaces (3DUI) 2010, pp. 47-50.

- Kaushik Das and Christoph W. Borst, “VR Menus: Investigation of Distance, Size, Auto-scale, and Ray-casting vs. Pointer-attached-to-menu”, to appear in Proceedings of ISVC (Visual Computing) 2010.

User Study of Stereoscopy and Lit Shading

Research Assistant: Nicholas Lipari

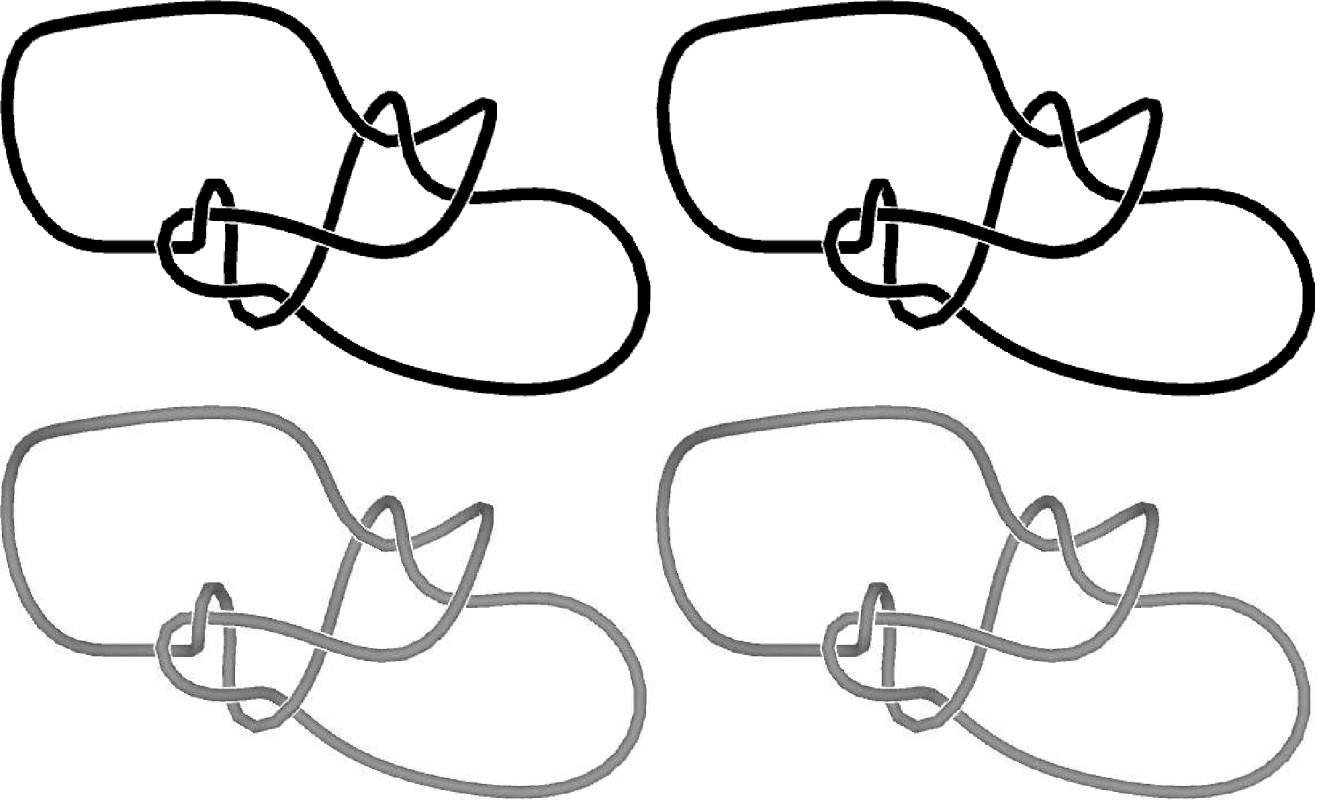

We conducted an experiment on depth cues in desktop virtual reality (fishtank VR). We evaluated stereoscopy and 3D lit shading for a counting task in knot visualization (counting crossings). Surprisingly, participants made significantly more errors with stereoscopic visuals than with monoscopic visuals. This contrasts with evaluations of fishtank VR for other applications and provides insight into the limitations of stereo imaging.

Stereo pairs of a knot with two different types of shading. These can be viewed with cross-eyed stereo free viewing (right eye image on left, left eye image on right).

Publications

- Nicholas G. Lipari and Christoph W. Borst, “Evaluation of Stereoscopy and Lit Shading for a Counting Task in Knot Visualization”, The 2007 International Conference on Computer Graphics and Virtual Reality, pp. 23-29. (PDF)