Networked Virtual Reality and Kvasir-VR

- Kvasir-VR: Networked VR Overview

- Teacher-guided Networked VR Field Trips

- Tiny House Walkthrough and Other Collaboration with High Schools

- Remotely-guided VR for Geosciences Interpretation

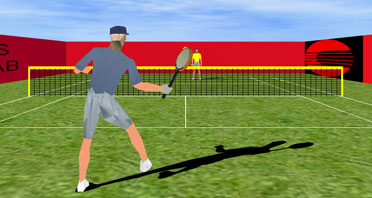

- Networked Virtual Tennis with Heterogeneous Displays

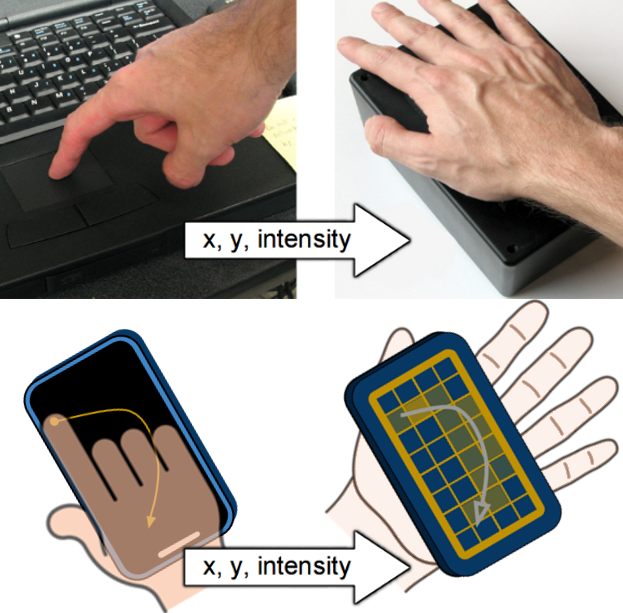

- Networked Touch with 2D Tactile Grids

- Networked Robotics: Telepresence and Predictive Displays

Kvasir-VR: Networked VR Overview

“Kvasir-VR” refers to our networked collaborative VR approaches, in particular those that let teachers guide students who are immersed in VR environments. Kvasir-VR enables immersed students to meet remote experts for guided activities, or to be guided and assisted more directly by their nearby teacher. Our most recent work captures a teacher with a 3D camera (Kinect) and streams the data over a network for incorporation into live VR field trips. Classroom-deployable VR stations and our specialized teacher interface let us take the approaches “into the field” and study them in real classrooms. The following outline summarizes Kvasir-VR:

- Networked VR Field Trip technology

  - Live or prerecorded 3D teacher imagery streamed into VR, guiding immersed users

  - Classroom-deployable VR stations

  - Specialized practical interfaces for teachers and students

- Explored Applications

  - Geosciences interpretation: Geologists remotely guiding an audience

  - Solar energy education: VR field trips of a solar plant, deployed at high schools

  - Tiny house walkthrough: streamed between two local schools

  - VR tennis: preliminary work on networked asynchronous displays

  - Many more education and workforce training applications possible

- Long-distance networked operation

  - Lafayette to Austin, TX, at Smart Cities events, 2016 and 2017

  - Lafayette to Adelaide, Australia, at the launch of IgniteSA, Oct 2017, using LONI, Internet2, Pacific Wave, and AARNET

  - Lafayette to Chattanooga Downtown Library, June-July 2018

- Related research fields and opportunities

  - Asymmetric interface design for immersed and non-immersed users

  - Effective educational techniques and architectures for VR

  - Improving effectiveness with environment responses to attentional cues (e.g., eye tracking and physiological data)

- Links and Awards

  - Best Research Demo award at IEEE VR 2017

  - 2016 US Ignite award for creative use of gigabit networks

  - Post-Secondary Educator of the Year (2016) award related to VR education

  - Press release: International demo between Lafayette and Australia

  - Kvasir-VR summary description at US Ignite

  - Kvasir-VR summary description at Mozilla Pulse

  - Funding: NSF, Louisiana BoRSF, US Ignite, Mozilla
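The overview mentions capturing a teacher with a Kinect and streaming the data over a network. As a minimal sketch of what one streamed message could look like, assuming raw 16-bit depth frames in millimetres (0 meaning "no reading") are sent rather than meshes, the code below packs a frame behind a small fixed header and compresses it losslessly with zlib. The header layout, the `DPTH` tag, and the function names are hypothetical illustrations, not Kvasir-VR's actual protocol.

```python
import struct
import sys
import zlib
from array import array

# Hypothetical wire header: magic tag, frame id, width, height, payload length.
# "<" = little-endian with no padding; 4s = 4 bytes, I = uint32, H = uint16.
HEADER = struct.Struct("<4sIHHI")
MAGIC = b"DPTH"


def pack_depth_frame(frame_id: int, width: int, height: int, depth_mm: list[int]) -> bytes:
    """Serialize a row-major depth image of 16-bit millimetre values into one message."""
    if len(depth_mm) != width * height:
        raise ValueError("depth buffer size does not match width * height")
    samples = array("H", depth_mm)  # unsigned 16-bit samples
    if sys.byteorder == "big":
        samples.byteswap()  # keep the payload little-endian on the wire
    payload = zlib.compress(samples.tobytes(), 1)  # level 1: fast, still lossless
    return HEADER.pack(MAGIC, frame_id, width, height, len(payload)) + payload


def unpack_depth_frame(message: bytes) -> tuple[int, int, int, list[int]]:
    """Inverse of pack_depth_frame: returns (frame_id, width, height, depth_mm)."""
    magic, frame_id, width, height, length = HEADER.unpack_from(message)
    if magic != MAGIC:
        raise ValueError("message is not a depth frame")
    payload = message[HEADER.size:HEADER.size + length]
    samples = array("H")
    samples.frombytes(zlib.decompress(payload))
    if sys.byteorder == "big":
        samples.byteswap()
    if len(samples) != width * height:
        raise ValueError("decoded frame has the wrong number of samples")
    return frame_id, width, height, samples.tolist()
```

Lossless compression is chosen here because small depth errors show up as visible geometric noise once the frame is turned into a mesh; a deployed system may instead stream meshes directly (the ISVC paper listed below describes the project's mesh-streaming techniques).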

Teacher-guided Networked VR Field Trips (2014+)

We applied Kvasir-VR field trip approaches in the Networked Virtual Energy Center application, allowing a live remote teacher to guide students through a virtual solar plant. A standalone version with prerecorded 3D teacher clips can also provide the field trips without a live teacher. Our most recent experiment compared the effects of the live (networked) and prerecorded (standalone) approaches. The system has been deployed to hundreds of students in high school classrooms, and to thousands through outreach events. Major demonstrations of the live version included interstate and intercontinental operation over high-performance research networks such as Internet2.

Images

A remote teacher guides students from a large TV interface with a Kinect mounted at its bottom. Visuals include: 1) a mirror view of the environment, 2) synchronized student views at the TV’s lower left, 3) webcam views of the students, shown in ovals positioned so the teacher can make eye contact with them, and 4) pointing cues that help the teacher point correctly in 3D.
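Item 4 mentions pointing cues, but the caption does not say how they are computed. One plausible approach, sketched here purely as an assumption rather than taken from the paper: treat the forearm (elbow and hand joints from the Kinect skeleton) as a pointing ray, find where it meets the virtual ground, and report the nearest named target within a small angular tolerance so it can be highlighted. The function names, the y-up ground plane at y = 0, and the 10° tolerance are hypothetical.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v):
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def pointing_ray(elbow, hand):
    """Hypothetical cue ray: starts at the hand, points along the forearm (elbow -> hand)."""
    return hand, normalize(sub(hand, elbow))


def hit_ground(origin, direction, ground_y=0.0):
    """Point where the ray crosses the plane y = ground_y, or None if it never does."""
    if abs(direction[1]) < 1e-9:  # ray parallel to the ground
        return None
    t = (ground_y - origin[1]) / direction[1]
    if t <= 0.0:  # the ground crossing is behind the hand
        return None
    return (origin[0] + t * direction[0], ground_y, origin[2] + t * direction[2])


def nearest_target(origin, direction, targets, max_angle_deg=10.0):
    """Name of the target closest in angle to the ray, if within max_angle_deg, else None."""
    best_name, best_angle = None, math.radians(max_angle_deg)
    for name, position in targets.items():
        offset = sub(position, origin)
        if dot(offset, offset) == 0.0:
            continue  # target coincides with the hand; no direction to compare
        cos_angle = dot(direction, normalize(offset))
        angle = math.acos(max(-1.0, min(1.0, cos_angle)))  # clamp rounding error
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name
```

Snapping to the nearest target within a tolerance is one way to compensate for the mirrored 2D view, where small arm errors translate into large misses at a distance.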

A student wearing a head-mounted display stands at a virtual tower overlooking a virtual solar plant (the background duplicates the student’s view for illustration). A teacher (seen to the left) introduces the plant and is represented by a mesh captured by a Kinect depth camera.

Video accompanying a paper submission to the IEEE VR 2018 conference: “Teacher-Guided Educational VR: Assessment of Live and Prerecorded Teachers Guiding Field Trips”. The paper describes the “Kvasir-VR” framework for embedding teachers into VR and assesses live (networked) and prerecorded teachers in high schools. Because the teacher imagery comes from a depth camera (Kinect), it looks rougher than green-screen video, but it is 3D and does not require a special background.
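The caption notes that the depth-camera teacher is 3D and needs no special background. A common generic way to build such a mesh is sketched below as an assumption, not necessarily the technique of the ISVC paper listed below: back-project each depth pixel through a pinhole camera model, discard pixels with no reading or beyond a distance cutoff (a crude background removal), and join neighbouring pixels into triangles unless they span a large depth jump. The intrinsics (`fx`, `fy`, `cx`, `cy`) and both thresholds are hypothetical parameters.

```python
def depth_to_mesh(depth_mm, width, height, fx, fy, cx, cy,
                  max_depth_mm=2500, max_jump_mm=50):
    """Turn a row-major depth image (millimetres) into (vertices, triangles).

    Vertices are camera-space points in metres (y points down, as in the image);
    triangles are index triples into the vertex list.
    """
    vertices = []
    index_of = {}  # pixel (u, v) -> vertex index
    for v in range(height):
        for u in range(width):
            d = depth_mm[v * width + u]
            # 0 means "no reading"; pixels beyond the cutoff are treated as background.
            if 0 < d <= max_depth_mm:
                z = d / 1000.0
                index_of[(u, v)] = len(vertices)
                vertices.append(((u - cx) * z / fx, (v - cy) * z / fy, z))

    triangles = []
    for v in range(height - 1):
        for u in range(width - 1):
            a, b, c, d = (u, v), (u + 1, v), (u, v + 1), (u + 1, v + 1)
            # Two triangles per pixel quad, with the same winding order.
            for tri in ((a, c, b), (b, c, d)):
                if not all(p in index_of for p in tri):
                    continue  # a corner is missing or background
                depths = [depth_mm[p[1] * width + p[0]] for p in tri]
                if max(depths) - min(depths) > max_jump_mm:
                    continue  # silhouette edge: do not stretch a surface across it
                triangles.append(tuple(index_of[p] for p in tri))
    return vertices, triangles
```

In this sketch the depth-jump test is what avoids a green screen: the teacher's silhouette becomes a gap in the mesh rather than a wall of stretched triangles connecting the teacher to whatever stands behind.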

Selected Publications

- IEEE VR 2018 paper overviewing Kvasir-VR and evaluating networked and standalone approaches. (PDF) (Video)

- IEEE Workshop paper (Everyday Virtual Reality 2017) discussing a TV-based teacher interface and evaluating visual pointing cues. (PDF) (DOI)

- IEEE VR 2017 demo abstract about the teacher-student collaboration interface. Winner of the “Best Research Demo” award. (PDF) (Video) (DOI)

- ISVC (Visual Computing) paper describing techniques for mesh streaming, rendering, and the teacher’s interface. (PDF) (DOI)

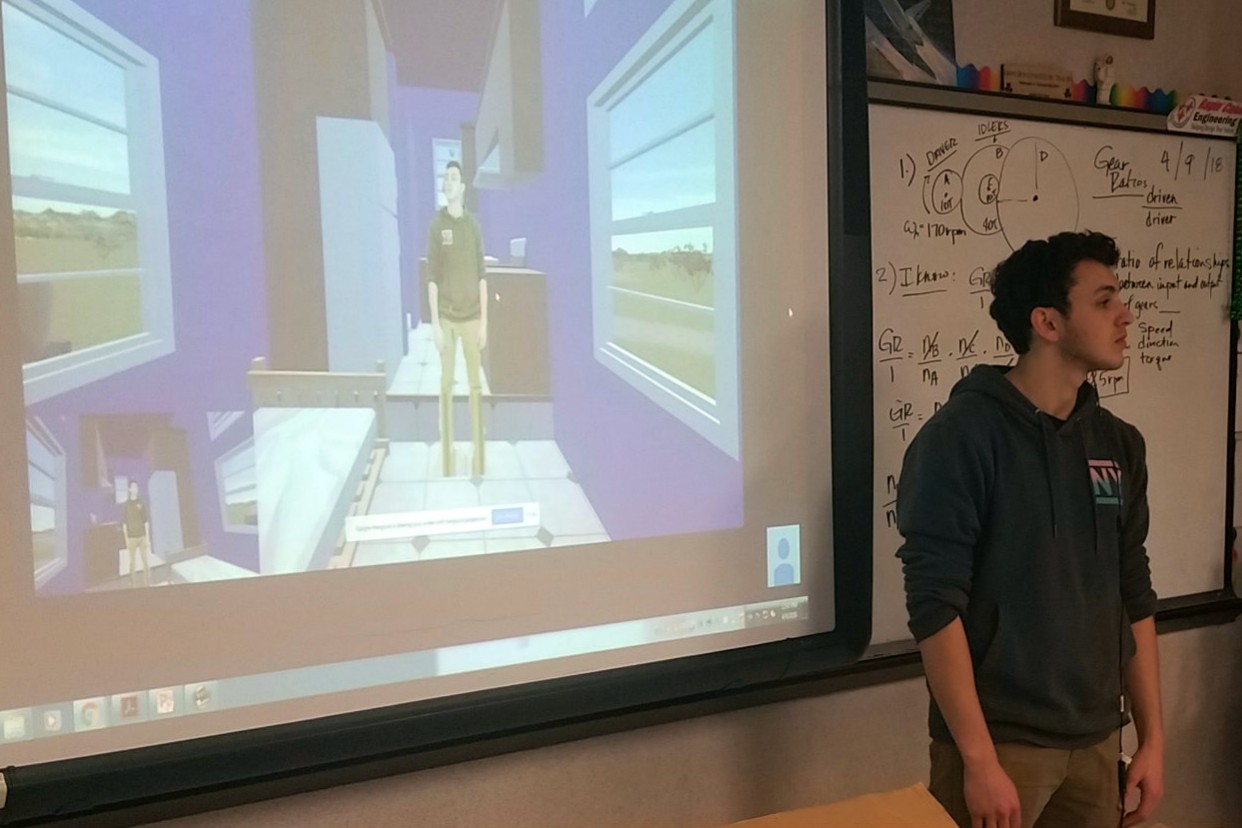

Tiny House Walkthrough and Other Collaboration with High Schools (2017-2018)

With funding from the Mozilla Foundation, we applied our approaches to educational VR applications envisioned by high school faculty and students. We set up Kvasir-VR between two schools, allowing a student at one school to remotely guide a VR walkthrough of a tiny house and explain its purpose and features. The high school students are also involved in building the real tiny house. Our mentoring of students and other project activities are further documented in our Mozilla Gigabit Community Fund Blog.

Local high school student Andre Garcia remotely guides a Carencro High student through his virtual Tiny House model.

Our project and department were featured in the following wrap-up documentary from Mozilla and AOC Community Media.

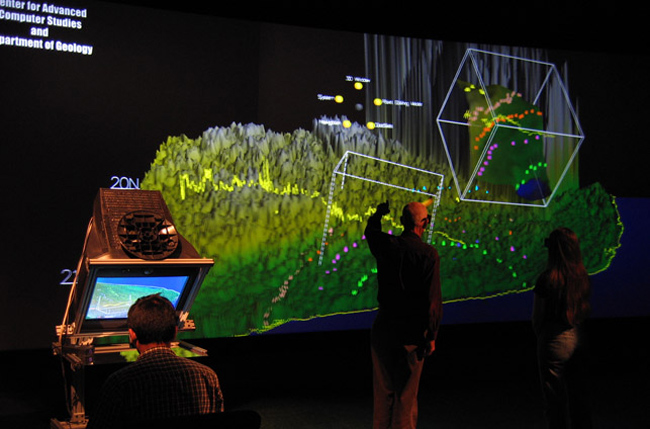

Remotely-guided VR for Geosciences Interpretation (2005+)

Research Assistants: Kaushik Das, Jan-Phillip Tiesel, Vijay Baiyya, Chris Best, Adam Guichard

Overview

This application of networked VR and asynchronous displays allowed a geologist using a low-cost desktop VR display to remotely guide users immersed in head-mounted displays or large projection displays. The geologist guided viewers through live interpretations of various datasets associated with the Chicxulub impact crater area, which is believed to be related to sudden mass extinctions. A proposed expansion of this project to national research networks received support from US Ignite in 2014 and then became the Networked Virtual Energy Center project above.

Remotely-guided VR with multiple networked display types was demonstrated at a special session of the GCAGS 2006 conference, using local networking. Our first deployment connecting geographically separate sites was at a 2006 conference of the Independent Association of Drilling Contractors, during which our VR lab was connected to a large-scale visualization facility (LITE).

Geological study of an impact crater with networked asynchronous displays for remotely-guided VR interpretation. Users annotate data surfaces and check several datasets for correspondence. The picture was taken during setup at GCAGS 2006 and later appeared in the Handbook of Virtual Environments (2nd Edition, 2014).

Also see our videos “Composable Volumetric Lenses” and “3D Windows in Geosciences Exploration System.”

Collaborative Interpretation of Geosciences Datasets

A follow-up to our previous geosciences data visualization tool, this application immerses multiple users in the Chicxulub impact crater for exploration and interpretation using eye-tracked Vive Pro HMDs. To suit the immersive view, we developed new menu interactions and collaborative tools.

Movement, terrain state, and annotations are shared across users to enhance cooperation in interpretation tasks. Users can find each other in the environment through a system of tethers that link in-world nametags to their avatar locations. Gaze locations are shared through gaze trails to allow communication about specific points on the terrain.
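The paragraph above describes tethers from nametags to avatars and shared gaze trails. A minimal sketch of the per-user bookkeeping a client might keep, assuming each received gaze sample carries a user name, a timestamp, and a terrain hit point; the `GazeSample`/`GazeTrail` names, the 40-sample capacity, the 3-second linear fade, and the straight-line tether are hypothetical choices, not details from the project.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class GazeSample:
    """One shared gaze hit: who looked, when (seconds), and where on the terrain."""
    user: str
    time: float
    point: tuple[float, float, float]


class GazeTrail:
    """Bounded, fading trail of one user's recent gaze hits (hypothetical design)."""

    def __init__(self, capacity: int = 40, fade_seconds: float = 3.0):
        self.samples = deque(maxlen=capacity)  # oldest samples drop out automatically
        self.fade_seconds = fade_seconds

    def add(self, sample: GazeSample) -> None:
        self.samples.append(sample)

    def visible(self, now: float) -> list[tuple[tuple[float, float, float], float]]:
        """(point, opacity) pairs; opacity falls linearly from 1 to 0 over fade_seconds."""
        result = []
        for s in self.samples:
            age = now - s.time
            if 0.0 <= age < self.fade_seconds:
                result.append((s.point, 1.0 - age / self.fade_seconds))
        return result


def tether_points(nametag, avatar, segments: int = 8):
    """Evenly spaced points on a straight line from a nametag to its avatar."""
    return [
        tuple(a + (b - a) * i / segments for a, b in zip(nametag, avatar))
        for i in range(segments + 1)
    ]
```

A bounded deque keeps memory and drawing cost constant however long a session runs, and fading by age shows where someone has just looked without cluttering the terrain.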

The system has been demonstrated to VR experts at a conference and to a local geology professor for review and interpretation.